# Specification Driven Testing

WARNING

This feature is available in the vREST NG Pro and Enterprise versions. Some features, such as Request Validation, were introduced in vREST NG v2.3.0.

In this guide, we will see how you can drive your entire API testing from an API specification file written in Swagger/OpenAPI format.

# Contents

- What is specification driven testing?

- Benefits of specification driven testing

- Defining an API specification file

- Generating specification driven API test case(s)

- Validating API response structure through API Specification schema references

- Validating API request structure through API specification file

- Maintaining specification driven API tests

- Running specification driven API tests

- API coverage reports

- Sample Project to demonstrate specification driven testing in vREST

- Current Limitations/Known issues

# 1. What is specification-driven testing?

In specification-driven testing, the main focus is the API specification file (Swagger/OpenAPI/... format), which we keep in sync with the latest API implementation.

In vREST, we first define our API specification file and then generate or define our API tests from that specification file. The API specification acts as a single source of truth for our API tests. If the API specification changes in the future, we can quickly detect and fix the affected API tests using the updated specification.

This approach can be combined with data-driven testing methodology to get the best of both worlds.

# 2. Benefits of specification driven testing

You will get the following benefits when you implement the specification-driven API testing methodology for your API automation in vREST.

Bring more clarity to the automation process: Defining API specification files at an early stage helps all the teams (Backend, Frontend, Testing, ...) do their respective jobs without much communication. The testing team is fully aware of the request and response structure of each API your backend supports. Otherwise, a lot of effort is spent finding out the exact request and response structure of each API, and this manual process leads to errors and communication overload among teams.

Allow automatic generation of API Tests: Defining the API specification file helps you generate API test(s) quickly with just a button click. This saves the considerable effort spent on the manual process and prevents the errors that the manual process can introduce. It also helps you validate the request and response structure of APIs in tests using the structure defined in the API specification file.

Single source of truth: API specification files act as a single source of truth for your API tests. Your API tests will always comply with the latest API specification format.

Easier Maintenance: You can validate the request and response structure of your API tests using the API specification file. If the API specification changes in the future, the response structure validation in your API tests is fixed automatically, because vREST always validates the API test's responses using the updated API specification file. For the request part, vREST helps you find the API test cases that need fixes.

# 3. Defining an API specification file

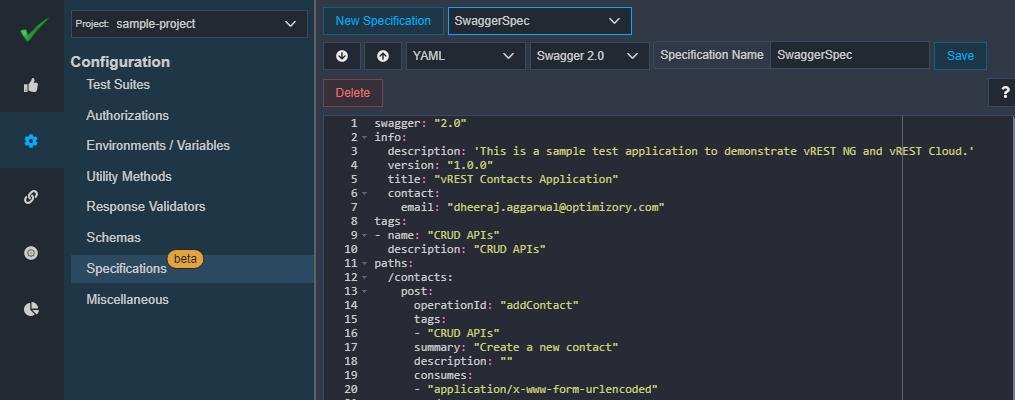

To define the API specification, visit the Configuration Tab >> Specifications section. Then click on the New Specification button and provide the following information:

- Specification type: You may provide either Swagger 2.0 or OpenAPI 3.0 type specifications, so select the appropriate value from the dropdown. Please contact us if you are using any other API specification format in your project.

- Data format: You may provide the API specification content either in JSON or YAML format. Choose the appropriate format at your convenience.

- Specification name: Provide a suitable name for this specification.

- Specification content: In the code editor, provide the API specification file content.

Now, simply click on the Save button to save this specification in the vREST project. Make sure you have specified a unique operationId for each API operation in the specification file (see line #14 in the above screenshot). vREST uses this field to bind API tests to the operations defined in the API specification file, so this field is mandatory for generating API tests in the next step.
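Before pasting a specification into the editor, it can help to confirm that every operation carries a unique operationId. The following is a minimal sketch of such a check, not part of vREST itself; the sample Contacts specification and the `check_operation_ids` helper are hypothetical and only illustrate the requirement described above.

```python
import json
from collections import Counter

# Hypothetical minimal OpenAPI 3.0 document used only for illustration.
SPEC_JSON = """
{
  "openapi": "3.0.0",
  "info": {"title": "Contacts API", "version": "1.0.0"},
  "paths": {
    "/contacts": {
      "get": {"operationId": "listContacts",
              "responses": {"200": {"description": "OK"}}},
      "post": {"operationId": "createContact",
               "responses": {"201": {"description": "Created"}}}
    },
    "/contacts/{contactId}": {
      "get": {"responses": {"200": {"description": "OK"}}}
    }
  }
}
"""

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def check_operation_ids(spec):
    """Return (missing, duplicates) for the operations in a parsed spec."""
    missing = []
    seen = Counter()
    for path, item in spec.get("paths", {}).items():
        for method, operation in item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            op_id = operation.get("operationId")
            if op_id:
                seen[op_id] += 1
            else:
                missing.append(f"{method.upper()} {path}")
    duplicates = sorted(op_id for op_id, count in seen.items() if count > 1)
    return missing, duplicates


missing, duplicates = check_operation_ids(json.loads(SPEC_JSON))
print("operations without operationId:", missing)
print("duplicated operationId values:", duplicates)
```

In this sample, the GET operation on `/contacts/{contactId}` has no operationId, so it would need one before a test could be bound to it.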

# 4. Generating specification driven API test case(s)

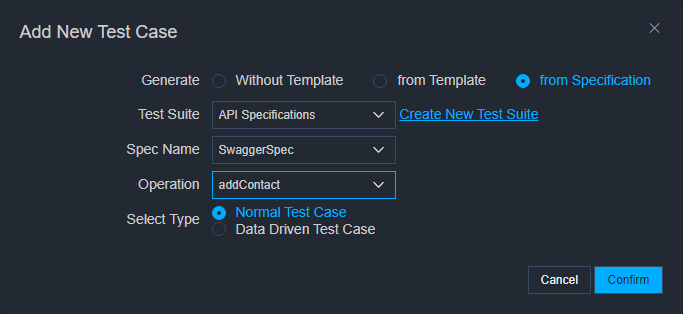

Once you have defined the API specification file as shown in the previous step, let's generate a test from the specification. To create a test, visit the Test cases tab and click on the + icon in the toolbar.

A dialog window will appear. In the dialog window, select the Generate from Specification option. The system then asks for the Test Suite Name, Spec Name, and Operation.

Select the Spec Name from the list and then select the operation for which you would like to generate the test. The system will then ask you to choose one of the following test case types:

- Normal Test Case

- Data Driven Test Case

Select a data-driven test case if you would like to generate a data-driven API test, otherwise, select the normal test case option. If you choose the Data-Driven Test Case option then vREST also generates the CSV file structure for you and binds the CSV file and columns with the API test case automatically.

Now click on the Confirm button to generate the test. Now, let's look at some of the information generated in the API test.

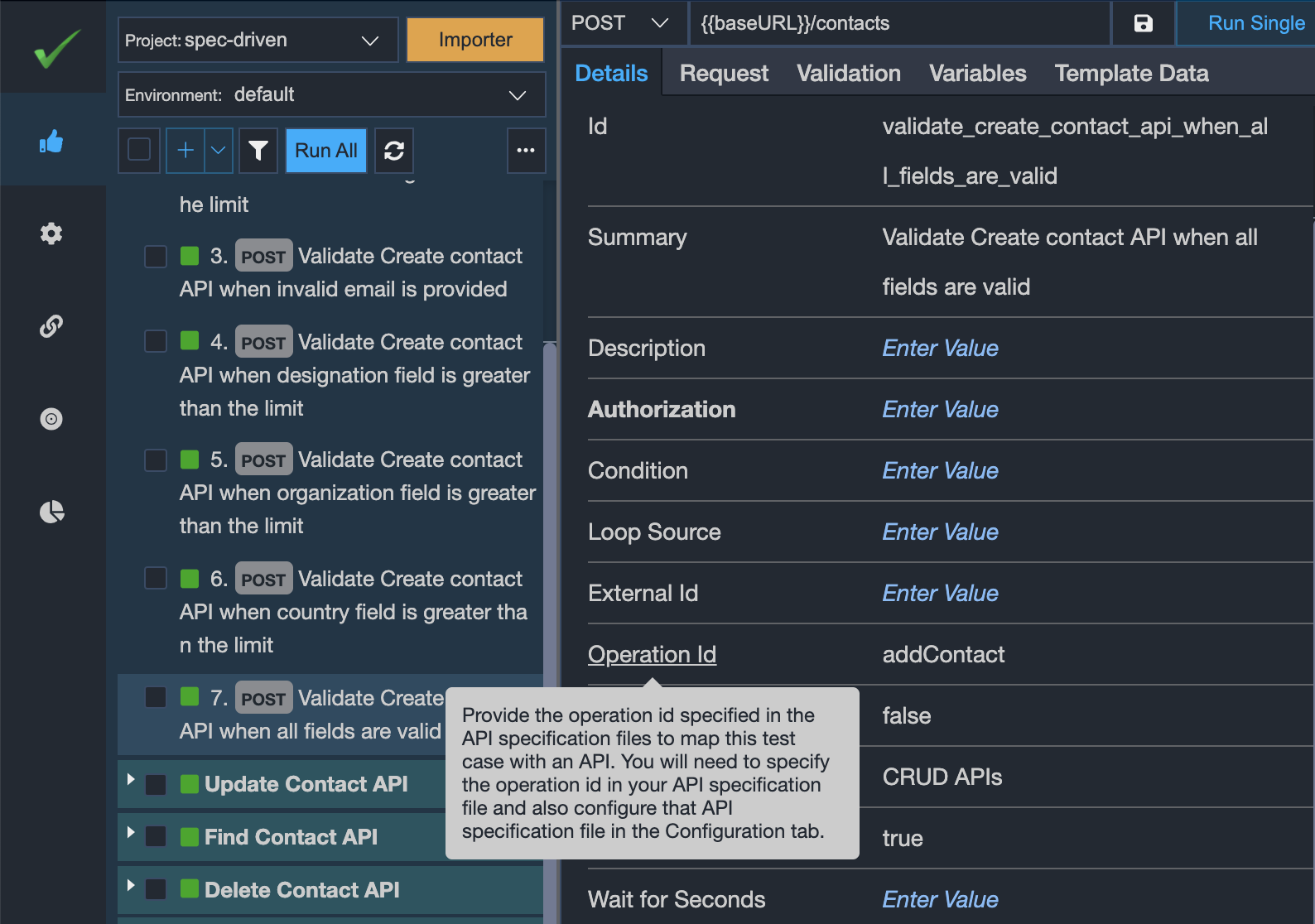

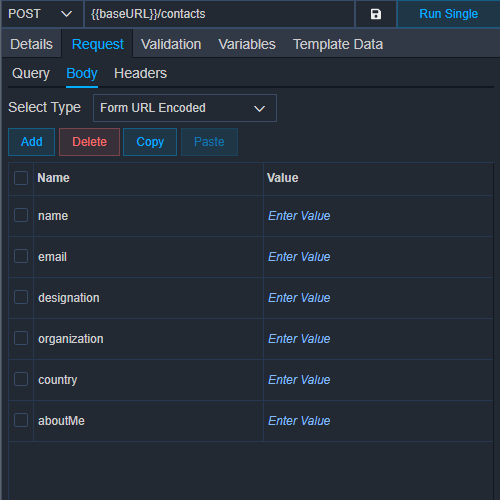

The following screenshots illustrate the API test generated through the Normal Test Case option. vREST automatically picks the request method, URL, request parameters (query, form, header), and request body to generate the API test case, and it also adds the assertions to validate the test case. In addition, it binds the test case to the specific API specification operation using the Operation Id field in the details tab, as shown in the screenshot below.

The following screenshot illustrates the request parameters generated from API specification:

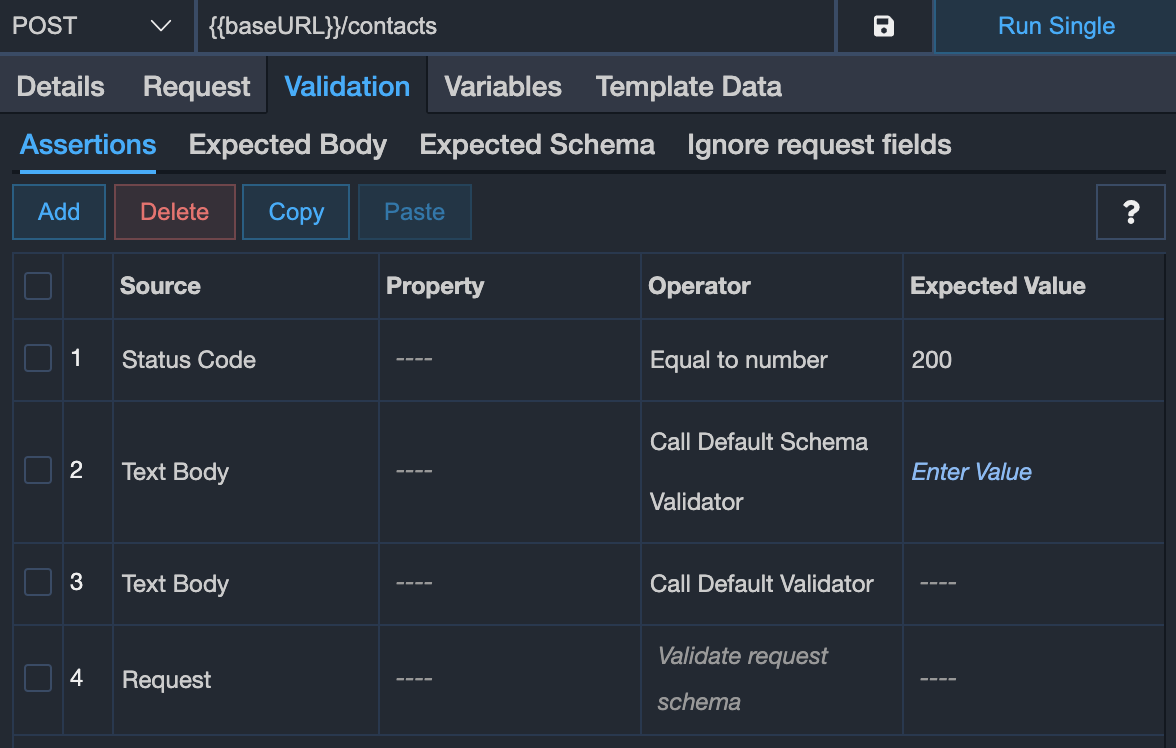

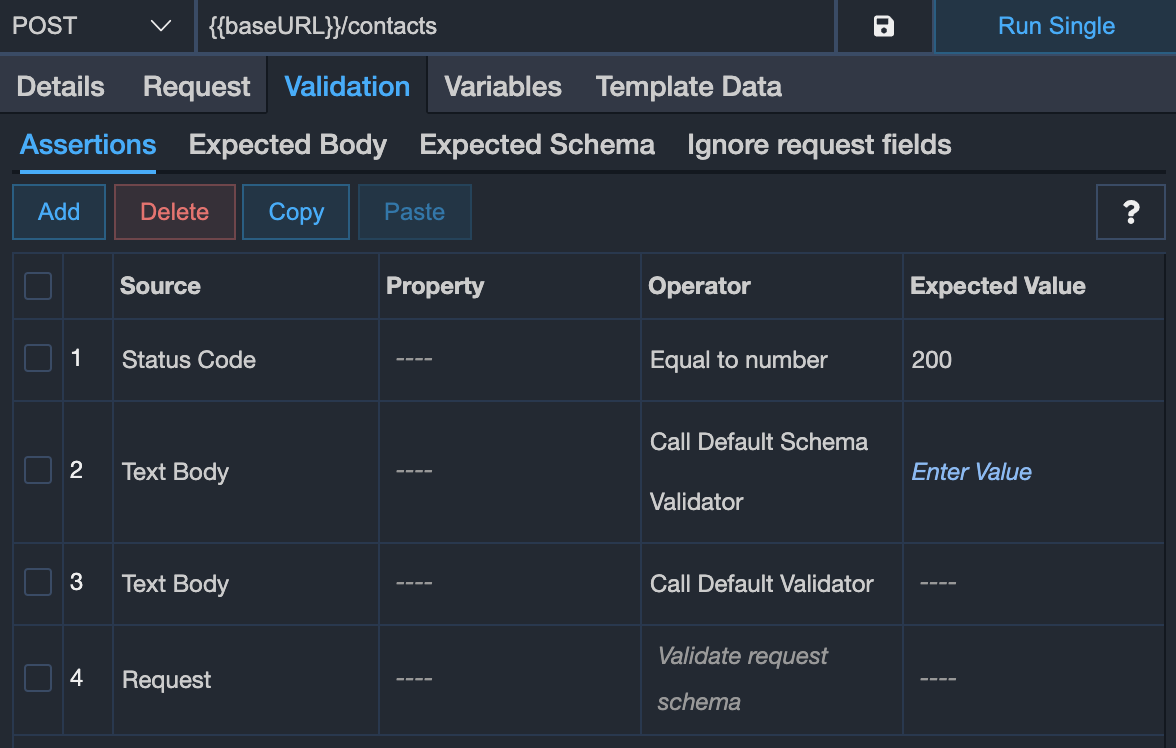

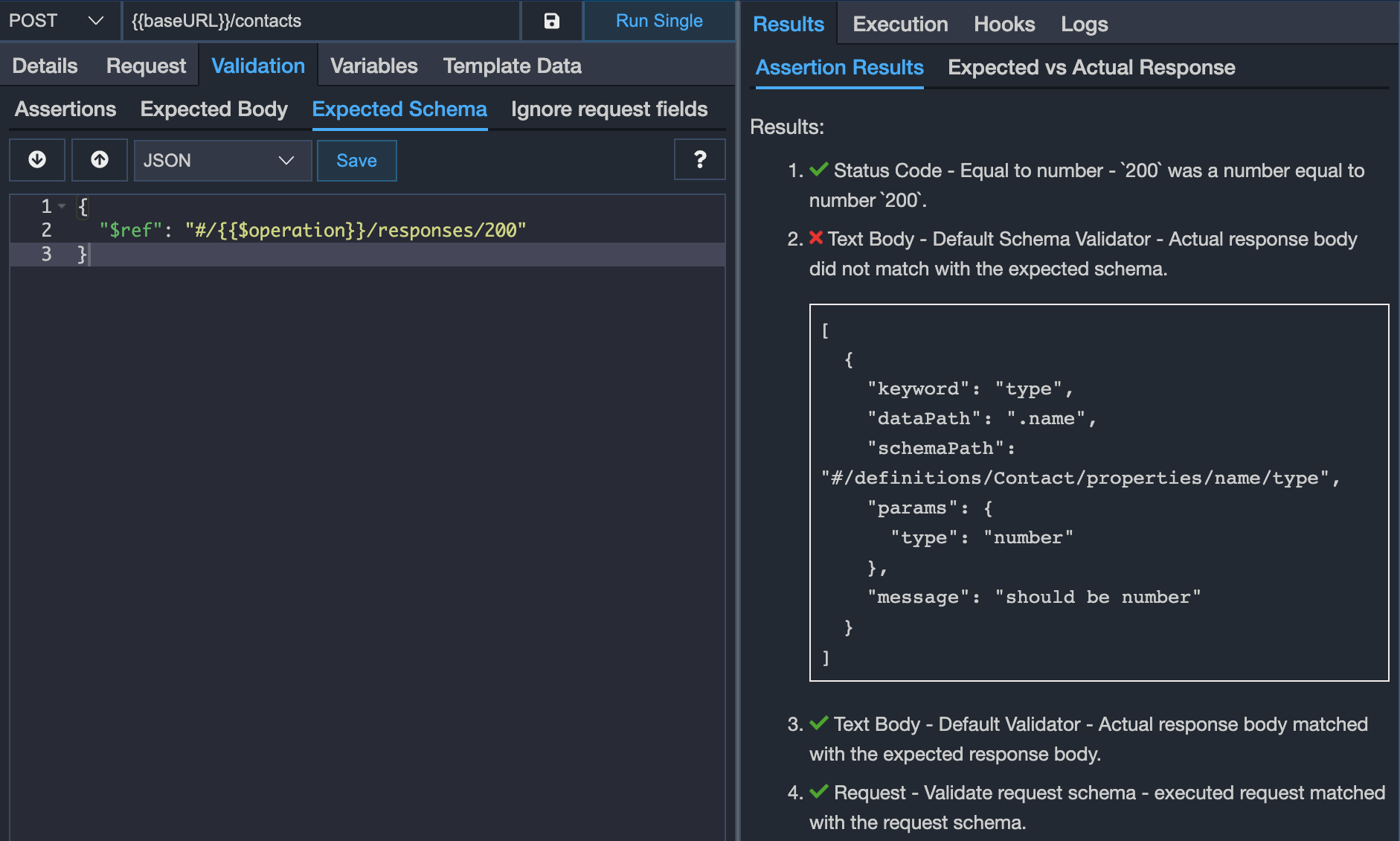

The following screenshot illustrates the validation logic generated from API specification:

In the above screenshot, the first assertion validates the status code against the expected status code. The second assertion validates the API response structure against the schema defined in the Swagger/OpenAPI specification. The schema referred to by this assertion is defined in the Expected Schema tab, as shown in the next screenshot. The third assertion validates the API request structure against the structure defined in the API specification.

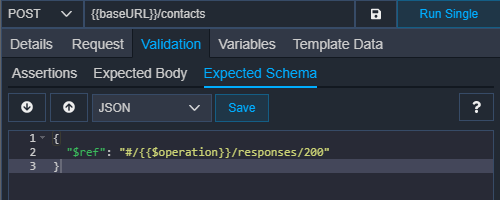

The following screenshot illustrates the generated Expected Schema tab content. Here {{$operation}} is a special variable that contains the value of the operation id linked to this test case. So, here we are validating the structure of the API response against the response structure defined in the API specification (responses list >> 200).

# 5. Validating API response through API Specification schema references

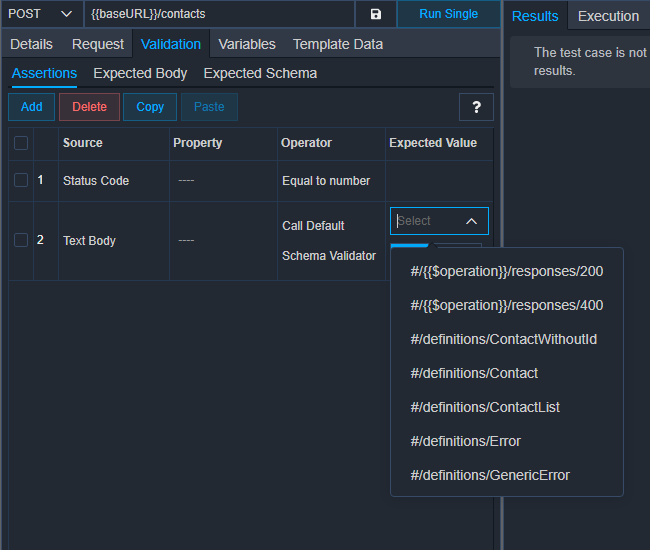

Now, let's see how you may validate your API response through the schema references defined in the API specification file. Open the Validation >> Assertions tab and add a Text Body assertion that calls the Default Schema Validator. In the Expected Value column, select the desired schema reference. You may also define this reference in the Expected Schema tab, as we have seen in the previous step.

Please note that if you define the value in the Expected Value column of the Assertions tab, then the Expected Schema tab content will be ignored.
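To make the idea of validating a response against a schema reference more concrete, here is a deliberately simplified sketch. It is not the Default Schema Validator and covers only `type`, `required`, `properties`, `items`, and local `$ref` lookups; the `Contact` schema, the `resolve_ref` and `validate` helpers, and the sample responses are all hypothetical.

```python
# Hypothetical fragment of an OpenAPI 3.0 document with one schema definition.
SPEC = {
    "components": {
        "schemas": {
            "Contact": {
                "type": "object",
                "required": ["id", "name"],
                "properties": {
                    "id": {"type": "integer"},
                    "name": {"type": "string"},
                    "emails": {"type": "array", "items": {"type": "string"}},
                },
            }
        }
    }
}

TYPE_MAP = {
    "object": dict,
    "array": list,
    "string": str,
    "integer": int,
    "number": (int, float),
    "boolean": bool,
}


def resolve_ref(spec, ref):
    """Follow a local reference such as '#/components/schemas/Contact'."""
    if not ref.startswith("#/"):
        raise ValueError("only local references are supported in this sketch")
    node = spec
    for part in ref[2:].split("/"):
        node = node[part]
    return node


def validate(value, schema, spec, path="$"):
    """Return a list of structural errors (empty when the value matches)."""
    if "$ref" in schema:
        schema = resolve_ref(spec, schema["$ref"])
    expected = schema.get("type")
    if expected:
        # bool is a subclass of int in Python, so reject it for numeric types.
        bool_as_number = isinstance(value, bool) and expected in ("integer", "number")
        if not isinstance(value, TYPE_MAP[expected]) or bool_as_number:
            return [f"{path}: expected {expected}, got {type(value).__name__}"]
    errors = []
    if isinstance(value, dict):
        for key in schema.get("required", []):
            if key not in value:
                errors.append(f"{path}.{key}: required property is missing")
        for key, sub_schema in schema.get("properties", {}).items():
            if key in value:
                errors.extend(validate(value[key], sub_schema, spec, f"{path}.{key}"))
    elif isinstance(value, list) and "items" in schema:
        for index, item in enumerate(value):
            errors.extend(validate(item, schema["items"], spec, f"{path}[{index}]"))
    return errors


REF = {"$ref": "#/components/schemas/Contact"}
good_errors = validate({"id": 1, "name": "Ada", "emails": ["ada@example.com"]}, REF, SPEC)
bad_errors = validate({"id": "1", "emails": ["ok", 5]}, REF, SPEC)
print(good_errors)
for error in bad_errors:
    print(error)
```

Because the test only stores the reference, a change to the `Contact` definition in the specification changes what is validated without touching the test, which is the property this section relies on.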

# 6. Validating API request structure through API specification file

Now, let's see how you may validate your API request structure using our API specification file. If you have linked any test case using the Operation Id field with any of the API specification operations then you will be able to add an assertion with source Request in the Validation >> Assertions tab.

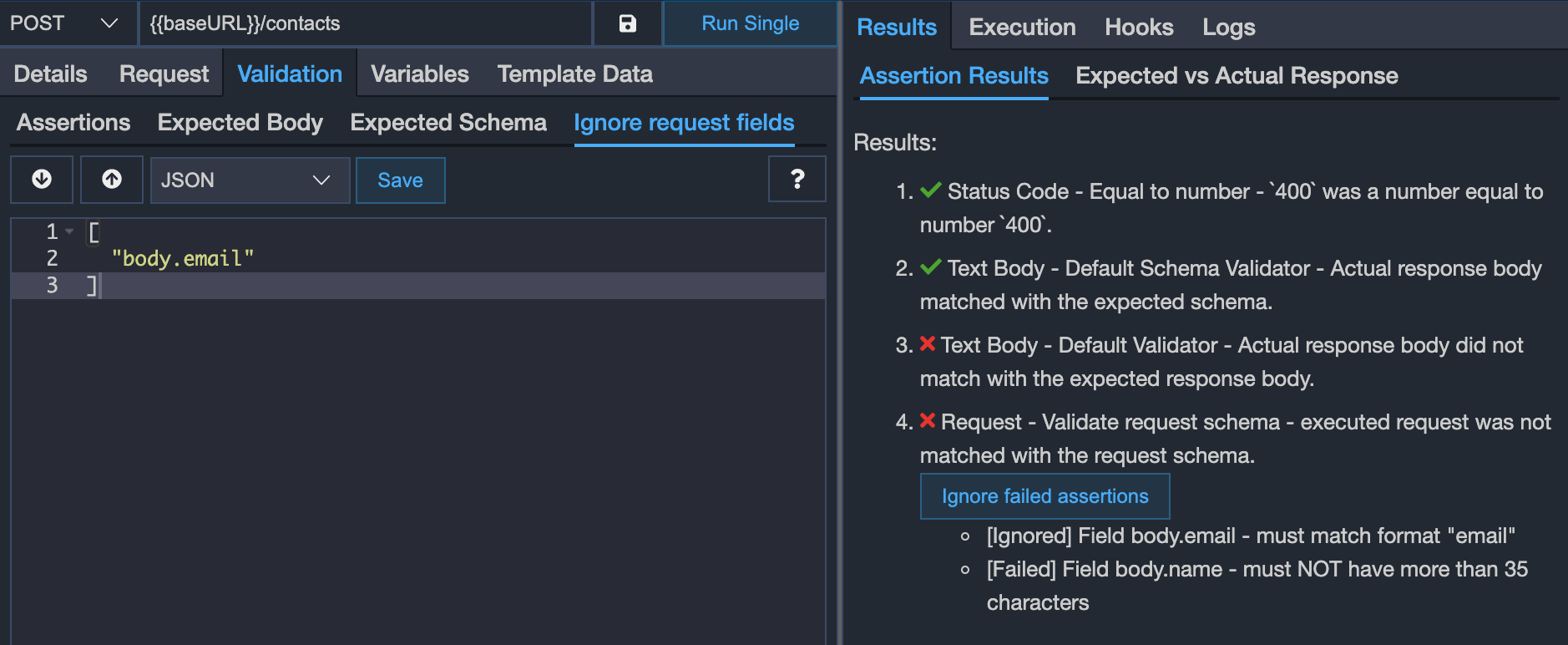

This helps you quickly detect the API tests whose request structure has gone out of sync with the API specification. In your API test, you may also ignore certain request fields during the validation check, so that you can deliberately send invalid requests to test error conditions.
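As a rough illustration of what request validation against an operation involves, the sketch below checks a test's query parameters against the parameter definitions of an OpenAPI 3.0 operation. This is an assumption-laden simplification rather than vREST's implementation: the `OPERATION` definition, the `validate_query` helper, and the `query.NAME` error format are hypothetical. Like the known limitation listed at the end of this guide, it does not flag extra parameters.

```python
# Hypothetical operation definition, in OpenAPI 3.0 parameter style.
OPERATION = {
    "operationId": "listContacts",
    "parameters": [
        {"name": "sort", "in": "query", "required": False,
         "schema": {"type": "string"}},
        {"name": "limit", "in": "query", "required": True,
         "schema": {"type": "integer"}},
    ],
}


def validate_query(operation, query):
    """Return error strings for query parameters that do not match the operation.

    `query` maps parameter names to the raw string values sent by the test.
    """
    errors = []
    for param in operation.get("parameters", []):
        if param.get("in") != "query":
            continue
        name = param["name"]
        if name not in query:
            if param.get("required"):
                errors.append(f"query.{name}: required parameter is missing")
            continue
        if param.get("schema", {}).get("type") == "integer":
            try:
                int(query[name])
            except ValueError:
                errors.append(f"query.{name}: expected integer")
    return errors


missing_limit = validate_query(OPERATION, {"sort": "name"})
wrong_type = validate_query(OPERATION, {"limit": "ten"})
extra_param = validate_query(OPERATION, {"limit": "10", "unknown": "x"})
print(missing_limit)
print(wrong_type)
print(extra_param)
```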

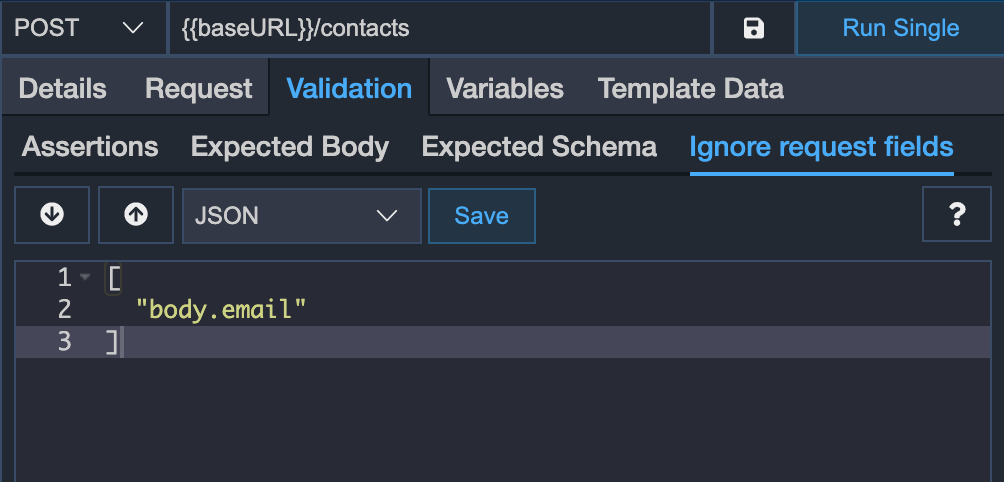

In the Validation >> Ignore request fields tab of a test case, specify an array of the request fields that you would like to ignore during request validation. You may also specify a variable here (its value must be an array or an empty string). A variable such as "{{data.$.ignoreFields}}" lets you fetch the fields from a CSV/XLSX file that has an ignoreFields column.

To ignore various kinds of request fields, follow the patterns below:

- path.FIELD_NAME: This is to ignore the path parameter field.

- query.FIELD_NAME: This is to ignore the query parameter field.

- body.FIELD_NAME: This is to ignore the JSON body field or form parameter. You may even ignore a nested JSON field by using the syntax body.ROOT_KEY.NESTED_FIELD.FIELD_NAME.

To ignore a specific item in an array field, you may use syntax like query.FIELD_NAME.0 or body.FIELD_NAME.0, body.FIELD_NAME.1, etc.

An example value that you may set is as follows:

    [
      "path.recordId",
      "query.sort",
      "body.name"
    ]

In the example screenshot, we have ignored the email field in the form parameters. So for this test case request, the structure of all the request fields will be checked except the email field.
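The ignore patterns can be pictured with a small sketch. It reads a hypothetical CSV file with an ignoreFields column and separates validation errors into reported and ignored ones. Several details here are assumptions and not documented vREST behavior: that the CSV cell holds a JSON array, that a pattern also covers fields nested below it, and the helper names `parse_ignore_fields`, `is_ignored`, and `split_errors`.

```python
import csv
import io
import json

# Hypothetical data file; the second row ignores the email form field.
CSV_TEXT = '''name,email,ignoreFields
Ada,ada@example.com,
Bob,,"[""body.email""]"
'''


def parse_ignore_fields(cell):
    """An empty string ignores nothing; otherwise expect a JSON array."""
    if cell.strip() == "":
        return []
    fields = json.loads(cell)
    if not isinstance(fields, list):
        raise ValueError("ignoreFields must be an array or an empty string")
    return fields


def is_ignored(field_path, ignore_fields):
    """Assumption: a pattern matches the field itself and anything nested below it."""
    return any(
        field_path == pattern or field_path.startswith(pattern + ".")
        for pattern in ignore_fields
    )


def split_errors(errors, ignore_fields):
    """Split {field_path: message} into (reported, ignored) dictionaries."""
    reported = {f: m for f, m in errors.items() if not is_ignored(f, ignore_fields)}
    ignored = {f: m for f, m in errors.items() if is_ignored(f, ignore_fields)}
    return reported, ignored


rows = list(csv.DictReader(io.StringIO(CSV_TEXT)))
ignore_for_bob = parse_ignore_fields(rows[1]["ignoreFields"])
errors = {"body.email": "expected string", "query.sort": "unexpected value"}
reported, ignored = split_errors(errors, ignore_for_bob)
print("reported:", reported)
print("ignored:", ignored)
```

Matching on whole dotted segments means that `body.name` does not accidentally ignore `body.names`, and that `body.tags.0` ignores only the first array item.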

# 7. Maintaining specification driven API tests

Maintenance is a major issue in API testing, and many API test automation efforts fail because of it. In vREST, maintaining API tests is quite simple: just update the API specification file in vREST and run the API tests again. vREST will let you know which API tests need fixes and give you the exact reasons why a particular API test fails, so that you can fix those issues quickly.

Normally an API test will need fixes due to the following conditions:

- Change in request data: vREST allows you to validate the request structure against the OpenAPI/Swagger API operation. So, if your API specification file changes, vREST will automatically point out the tests that need fixes. In this way, your API tests always remain in sync with your API specification.

- Change in API response content: vREST provides a button named Copy Actual to Expected, which automatically updates the expected response content set within your API tests with the latest value. If you have used any variables inside the Expected Body tab, vREST will fix the value of your expected body without changing your variables.

- Change in API response structure: Because you refer to the schema definitions from the OpenAPI/Swagger file, you do not need to change anything in your API tests. If the API response structure changes, just update the referred schema definitions in the API specification file.

# 8. Running specification-driven API tests

You may run the specification-driven API tests in the same way as you run any normal test case.

If the response schema assertion fails due to a change in API response structure, then you will get the following type of error in the Results tab.

And if the request schema assertion fails due to a change in API request structure, you will get the following type of error in the Results tab, which helps you quickly detect why the API test fails. If you have ignored certain request field(s) from validation, vREST will pass the assertion but still show you the ignored assertion errors. In the screenshot below, see the 4th assertion result to check request validation errors.

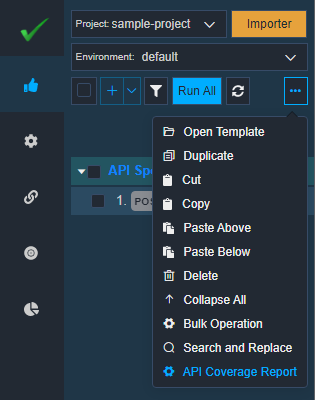

# 9. API coverage reports

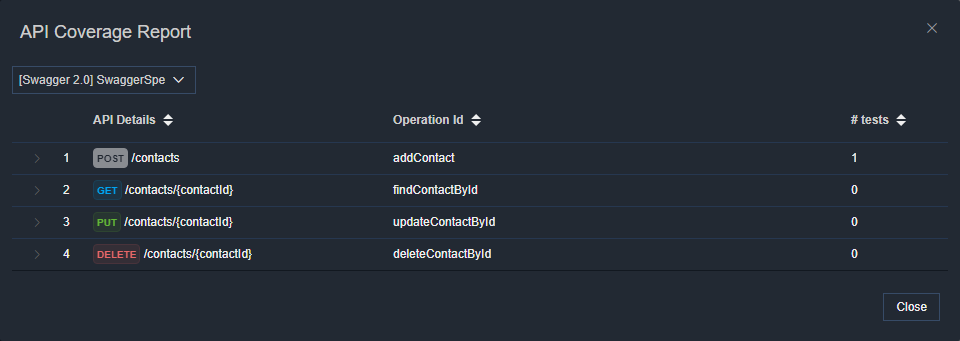

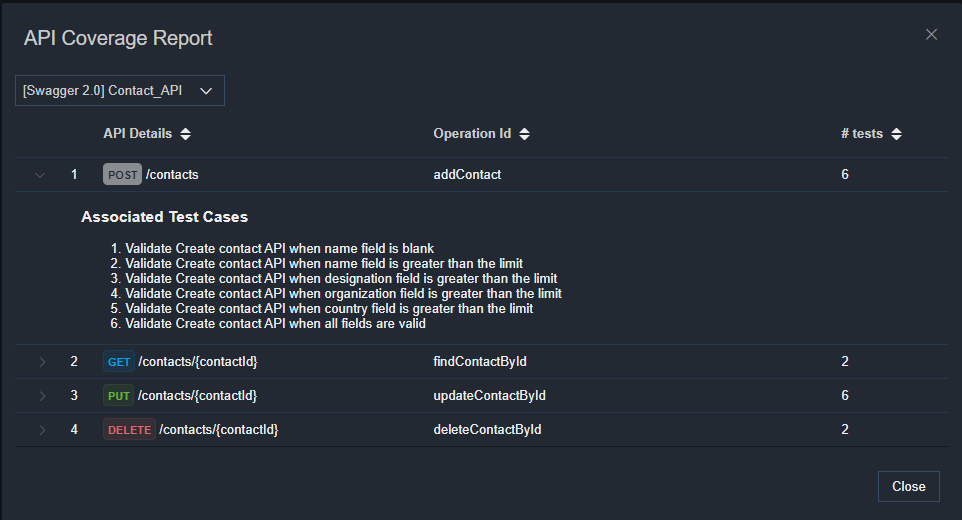

The API coverage report helps you identify the available APIs and their associated tests. The coverage report can be accessed using the More options available in the left pane.

The coverage report will look like this:

Similarly, we may add other tests as well. The API coverage report provides the complete status of your API tests against each API operation in the API specification file, as shown below:
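Conceptually, a coverage report of this kind maps each operation in the specification to the tests bound to it through the operationId. The sketch below shows that mapping under stated assumptions; the operation list, the test records, and the `coverage_report` helper are hypothetical and do not reflect how vREST computes or displays its report.

```python
from collections import Counter

# Hypothetical operationIds taken from a specification file.
SPEC_OPERATION_IDS = ["listContacts", "createContact", "getContact", "deleteContact"]

# Hypothetical test cases; operationId is None when a test is not bound to the spec.
TESTS = [
    {"name": "List contacts", "operationId": "listContacts"},
    {"name": "List contacts sorted", "operationId": "listContacts"},
    {"name": "Create contact", "operationId": "createContact"},
    {"name": "Ad hoc smoke test", "operationId": None},
]


def coverage_report(operation_ids, tests):
    """Return ([(operationId, test_count), ...], percent_of_operations_covered)."""
    counts = Counter(t["operationId"] for t in tests if t.get("operationId"))
    rows = [(op_id, counts.get(op_id, 0)) for op_id in operation_ids]
    covered = sum(1 for _, count in rows if count > 0)
    percent = 100.0 * covered / len(rows) if rows else 0.0
    return rows, percent


rows, percent = coverage_report(SPEC_OPERATION_IDS, TESTS)
for op_id, count in rows:
    print(f"{op_id}: {count} test(s)")
print(f"covered operations: {percent:.0f}%")
```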

# 10. Sample project to demonstrate specification driven testing in vREST

Implementing specification-driven testing in vREST is straightforward. To demonstrate it, the sample project validates a few of the APIs of our online Contacts Application (a CRUD application where you can create, read, update and delete contacts).

You may find the sample project containing test cases and test data files in this GitHub Repository.

# 11. Current Limitations/Known issues

The current limitations/known issues are as follows. We will fix them in our subsequent releases.

- The allowEmptyValue flag is currently not working during request validation.

- Extra query/form parameters, if found, do not raise any issues during request validation.