# Setting Up Models (Cloud Provider)

To use a hosted LLM via Groq:

# Step 1: Get Your Groq API Key

Visit: https://console.groq.com/keys

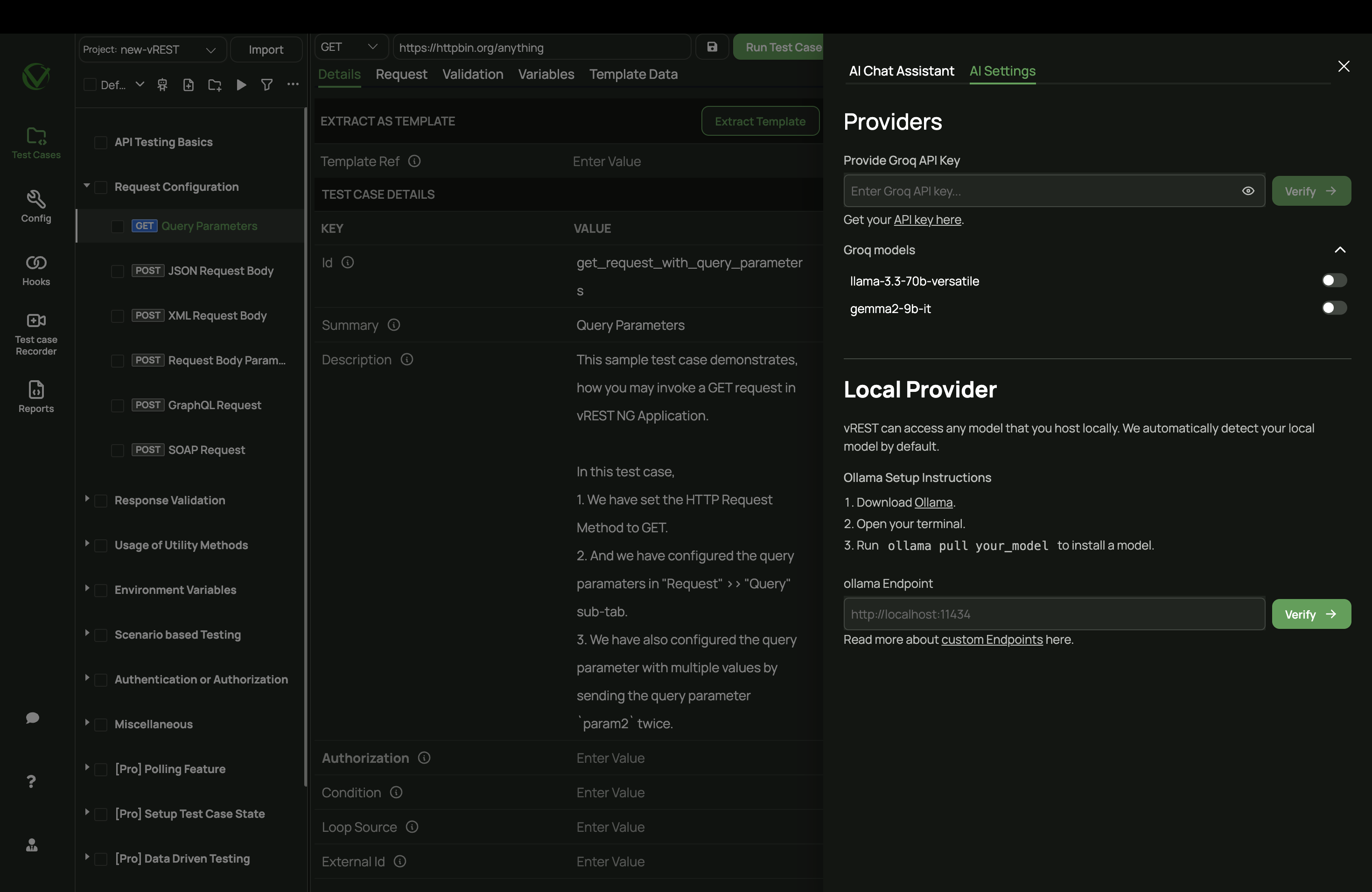

# Step 2: Enter API Key

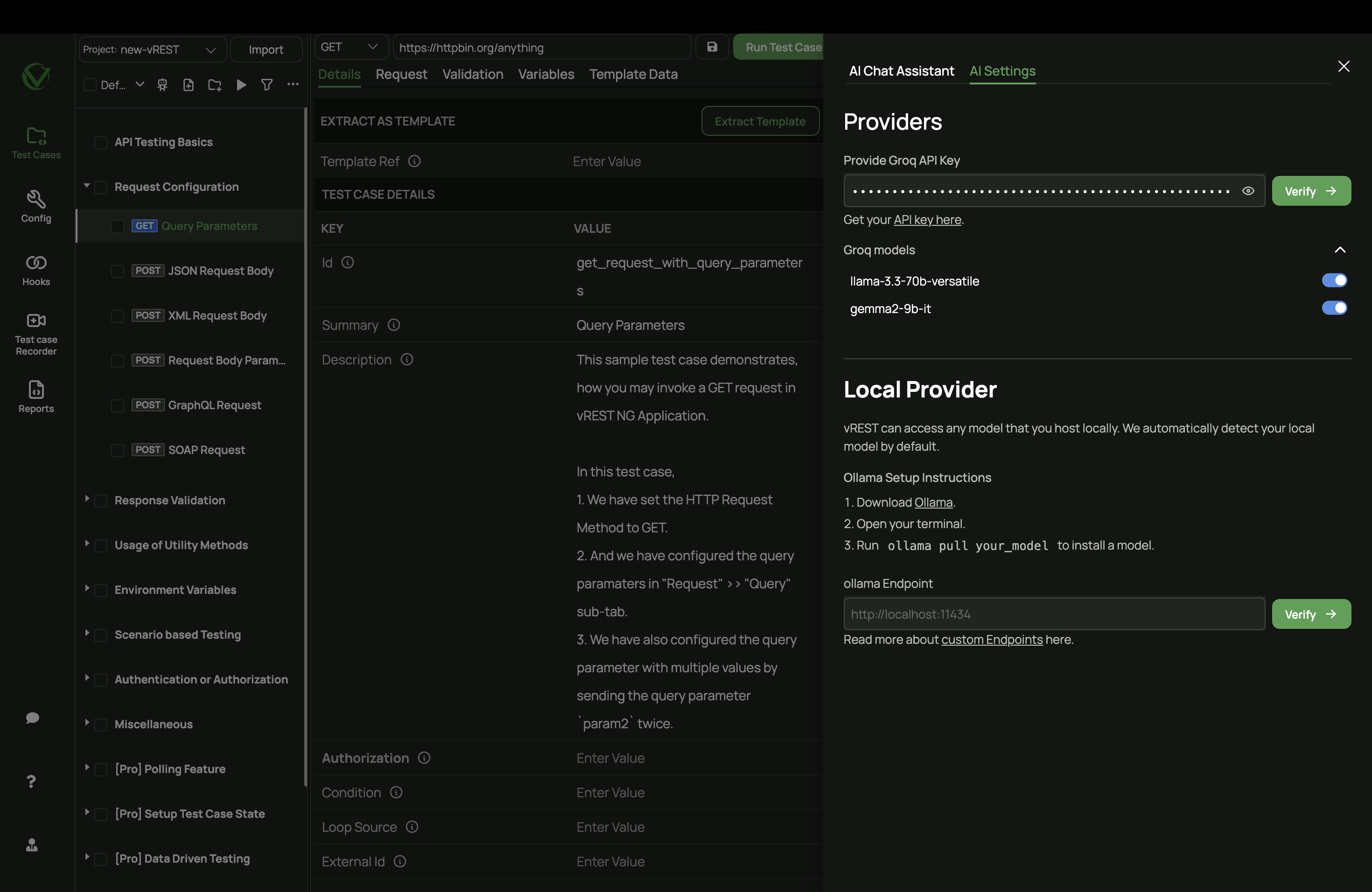

In the AI Settings tab, paste the API key and click Verify.
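If verification fails in the app, it can help to test the key outside it. The sketch below is one way to do that, not a description of what the Verify button does internally. It assumes Groq's OpenAI-compatible model-listing endpoint at `https://api.groq.com/openai/v1/models` and Bearer-token authentication. The function names `build_models_request` and `list_model_ids` and the User-Agent string are hypothetical, chosen for illustration.

```python
import json
import urllib.request

# Assumption: Groq's OpenAI-compatible endpoint for listing models.
GROQ_MODELS_URL = "https://api.groq.com/openai/v1/models"


def build_models_request(api_key: str) -> urllib.request.Request:
    """Build a GET request that lists the models visible to the given key."""
    key = api_key.strip()  # pasted keys often carry stray whitespace
    if not key:
        raise ValueError("API key is empty")
    return urllib.request.Request(
        GROQ_MODELS_URL,
        headers={
            "Authorization": f"Bearer {key}",
            # Hypothetical client name; some gateways reject urllib's default agent.
            "User-Agent": "groq-key-check/0.1",
        },
        method="GET",
    )


def list_model_ids(api_key: str, timeout: float = 10.0) -> list[str]:
    """Return model IDs for the key.

    Performs a network call. urllib raises urllib.error.HTTPError
    (for example, status 401) if the server rejects the key.
    """
    request = build_models_request(api_key)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.load(response)
    # Assumes the OpenAI-style shape: {"object": "list", "data": [{"id": ...}, ...]}
    return [model["id"] for model in payload.get("data", [])]
```

Calling `list_model_ids("<your key>")` from a Python shell should return a list of model IDs if the key is valid. An `HTTPError` suggests the key was rejected or mistyped.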

# Step 3: Enable a Model

Once the key is verified, the available models are enabled automatically. You can toggle individual models off or on later as needed.

- llama-3.3-70b-versatile
- gemma-2-9b-it